There are indeed concerns about the current science publishing model, but until major changes in grant funding are incorporated, researchers will continue to lust after publications in high-tier journals.

I had the honour of attending back-to-back lectures by Nobel awardees Dr James Rothman and Dr Randy Schekman at the recent American Society for Cell Biology meeting in New Orleans, and it was Dr Rothman’s comments that struck the right chord – he specifically pointed out that the US National Institutes of Health (NIH) needs to allocate more money for basic research. Dr Rothman’s talk followed a lecture by Dr Jeremy Berg, former director of the NIH’s National Institute of General Medical Sciences (NIGMS; the institute that promotes and funds basic research). In his lecture, Dr Berg noted that NIGMS receives only ~8% of the total NIH budget, yet about 60% of Nobel prizewinners are funded through this institute. Very telling, in my humble opinion.

The boycott of ‘super-journals’ with very high impact factors proposed by Nobel prizewinner Randy Schekman misses the key point.

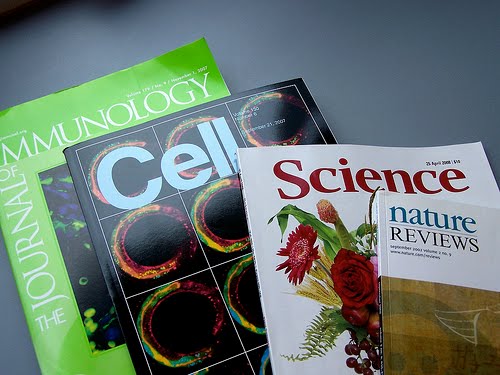

Dr Schekman also delivered an outstanding lecture, and he has recently made a case in the Guardian for changing the way in which science is published. Dr Schekman’s main beef is with the overwhelming attention that scientists are paying to something known as the “impact factor”, a criterion that he argues is not a fair way to assess the merit or productivity of a researcher.

The impact factor is a number attributed to a journal that essentially quantifies the mean citations garnered by all of the papers published in that journal over time. It has long been recognised that there are serious concerns with such a system. For one, this is an average of all papers published in the journal, so a few highly cited papers considerably increase the overall impact factor of a journal, while many papers might not be cited at all.
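To see how the averaging skews things, here is a minimal sketch in Python. The citation counts are invented for illustration and do not describe any real journal. The published impact factor is also calculated over a fixed two-year window rather than over all time, a detail this sketch ignores.

```python
from statistics import mean, median

# Hypothetical citation counts for ten papers in one journal
# (invented numbers, not real data).
citations = [0, 0, 0, 1, 1, 2, 2, 3, 4, 187]

# An impact-factor-style figure: the mean number of citations per paper.
journal_mean = mean(citations)

# The median shows what a typical paper in the journal actually receives.
journal_median = median(citations)

# Count the papers that fall short of the journal-wide average.
papers_below_mean = sum(c < journal_mean for c in citations)

print(f"mean citations per paper:   {journal_mean}")
print(f"median citations per paper: {journal_median}")
print(f"papers below the mean:      {papers_below_mean} of {len(citations)}")
```

In this made-up case a single heavily cited paper supplies almost all of the citations, so nine of the ten papers sit below the journal's average.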

Further complicating this method is that some journals publish reviews, perspectives, mini-reviews, commentaries, etc, and typically these tend to be very highly cited because they attract the attention of researchers outside a given field. On the other hand, other journals strictly publish research papers. And of course, some papers can also be highly cited, yet in a negative way. One such example is the highly publicised Benveniste affair, in which it was claimed that water contains a memory of the antibodies that had been diluted out of it – raising cries of victory from homeopaths around the world.

So why has this impact factor become so established among today’s scientists? One reason is that science itself is rapidly moving towards objective quantification – so there is a corresponding push to measure scientists’ productivity and careers in the same manner. In years gone by, scientists would describe a phenotype – for example, a descriptive difference observed – and then provide examples of representative images to back their claims. Today, the gold standard has evolved beyond show and tell to providing actual numbers to back up the claims: graphs, tables and statistics. Overall, this is a good thing, as it forces researchers to use objective measures for evaluating scientific findings. But in some cases, the phenomenon under study may not show a measurable difference.

But there are other reasons why impact factors have become popular. Researchers are being squeezed by poor grant funding, bureaucracy and a host of other time-consuming responsibilities. Ideally, new faculty candidates should be assessed on their scientific contributions and not on the journals in which they have published. But in the absence of time, impact factors have become an easy way to screen out candidates. This is wrong, but it won’t be easy to undo. The simple fact is that there are journals in which the peer review process is rigorous and thus the papers published can generally be trusted – and there are many other journals that are not worth the paper on which they are printed. Harsh? Perhaps, but also true.

I serve on a variety of editorial boards, and have reviewed scientific papers for many different journals. And I speak from experience when I say that some journals have non-existent standards. I have been asked to review manuscripts from such so-called peer reviewed journals, but when I recommended rejecting a paper for lack of controls and for claims by the authors that are either invalid, inaccurate or simply false, in some cases the editor went ahead and ultimately accepted the paper. Perhaps to collect the author’s publishing fee; I don’t know. In any case, from my perspective this rendered the review process useless, and I lost any respect for papers published in such a journal.

De facto, there is a journal hierarchy – and probably always will be. The question is how the system can be made better.

Some proponents of open access would basically abolish all journal distinctions. In this ideal world, everything should go up on the net – some even maintain that peer review is unnecessary. Although this is not the prevalent view, let me first explain why this model cannot work for biomedical sciences. Science today is highly specialised and scientists need to be able to build on published works – to trust that the peer review process has led to the publishing of reliable data. If anything and everything were to be published, scientists essentially could no longer use published data as the building blocks for continuing studies.

Researchers do not have the resources or time to repeat others’ experiments to test whether they are valid; and in many cases we rely on data coming from other fields – often data that we cannot easily evaluate on our own. As an example, my own cell biology research is often spurred on by new findings made by structural biologists. I can read and understand the conclusions drawn – and use them to test hypotheses concerning my research. But I would be unable to evaluate the actual x-ray data itself and decide if it’s convincing or even legitimate. That is the purpose of peer review.

However, the majority of those who seek to change the system of scientific publishing do not want to abolish peer review – in fact, a lot of the criticism is aimed at several of the most high-profile journals, in particular the trio of Cell, Science and Nature – considered by many to be the most influential journals for the careers of scientists. These journals are highly exclusive, and indeed send out only a fraction of the submitted manuscripts for peer review, as initial decisions are made by professional editors who work full-time for the journal. Criteria for acceptance in these journals – or even for review – demand that the research papers address hot topics of “broad” interest to the research community. They are supposed to represent startling innovations or discoveries of high significance.

There are many solid journals that have outstanding peer review processes, but do not disqualify papers based on a lack of perceived appeal or considerations of whether the published paper will be highly cited. I strive to publish in such journals, not because of any impact factor attributed to them, but because I know that papers published in these journals are rigorously reviewed, and typically garner respect from others in the scientific community. Yes, there are always exceptions; a great paper could be published in a journal that has a poor review process, and occasionally lousy papers manage to filter through to the rigorously reviewed journals. But there is a clear hierarchy, and this is independent of impact factors.

The solution to the problem of the ramped-up “super-journals” is not trivial, and it is tied into our funding system in the US and in Europe. In reviewing grant proposals for the National Institutes of Health – the main source for funding of biomedical researchers in the US – there is simply not enough money to support science. With success rates dipping well below 10%, reviewers are forced to distinguish between outstanding grant proposals, knowing that fewer than one in ten will be awarded funding. Since research topics vary widely, we reach a situation of comparing apples and oranges. And pineapples. Throw in mango, pears, peaches, papaya and even watermelon for good measure. So how does one compare? We come back to the “impact” – or more accurately – perceived impact. Reviewers are forced to make decisions based on their evaluation of the perceived impact of the research being proposed. And if one publishes in a super-journal with perceived high impact, does that not help make the case that one’s research is influential?

This is the crux of the matter: perceived impact is one of the most important criteria for funding, so unless researchers have a Nobel Prize – and are therefore immune to criticism of perceived impact – they will need to continue to strive to demonstrate the high impact of their studies.

I, too, signed and support DORA – the Declaration on Research Assessment – which states that scientists should be evaluated according to their scientific contributions, as opposed to an artificial and superficial numerical count of the impact factor of their publications. But a boycott of the “super-journals” with very high impact factors (Cell, Science and Nature), proposed by Dr Schekman, misses the key point. I would therefore recommend to my fellow cell biologist and newly minted Nobel laureate, Dr Schekman – for whom I have the greatest respect – that we first focus energies on securing sufficient funding to keep basic biomedical science afloat. I hope our outstanding Nobel winners will use their weighty influence and impact to lead the charge. Once we have managed to stem the damage to scientists’ careers, then it will be time to address the very complex issue of how to improve the science publication system.
